OpenAI GPT-4o is 'remarkably human', live demos reveal what it can do: All details

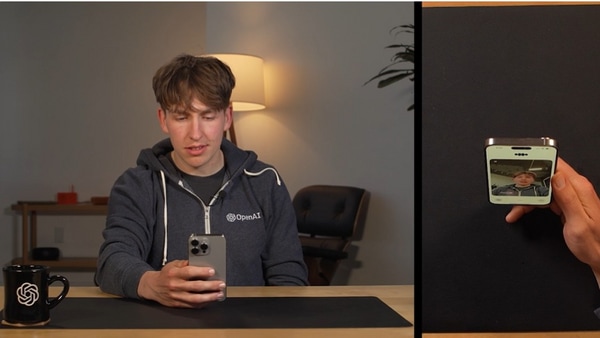

OpenAI has introduced GPT-4o, the latest and most sophisticated iteration of its AI model, designed to make digital interactions feel remarkably human. This new update aims to enhance the user experience significantly, bringing advanced capabilities to a broader audience.

Enhanced Interactivity with Voice Mode

During the announcement, OpenAI's team demonstrated GPT-4o's new Voice Mode, which promises more natural, human-like conversation. The demo showed the chatbot handling interruptions and adjusting its responses in real time, highlighting its improved interactivity.

CTO Mira Murati emphasised the model's accessibility, noting that GPT-4o extends the power of GPT-4 to all users, including those on the free tier. In a livestream presentation, Murati described GPT-4o, with the "o" standing for "Omni," as a major advancement in user-friendliness and speed.

Impressive Demonstrations

The demonstrations included a variety of impressive features. For instance, ChatGPT's voice assistant responded quickly and could be interrupted without losing coherence, showcasing its potential to revolutionise AI-driven interactions. One demo involved a real-time tutorial on deep breathing, illustrating practical guidance applications.

GPT-4o: Multiple Voice and Problem Solving Features

Another highlight was ChatGPT's ability to read an AI-generated story in multiple voices, from dramatic to robotic, even singing. Additionally, ChatGPT's problem-solving skills were on display as it helped a user through an algebra equation interactively, rather than just providing the answer.

GPT-4o: Be My Eyes Feature

In a particularly notable demonstration, termed "Be My Eyes," GPT-4o described cityscapes and surroundings in real time, offering assistance to visually impaired individuals. This feature could be a game-changer for accessibility.

GPT-4o's Multimodal Abilities and Language Translation

GPT-4o also showcased enhanced personality and conversational abilities compared to previous versions. It seamlessly switched between languages, providing real-time translations between English and Italian, and utilised a phone's camera to read written notes and interpret emotions.

The launch of GPT-4o comes just ahead of Google's I/O developer conference, where further advancements in generative AI are anticipated. OpenAI also announced a desktop version of ChatGPT for Mac users, with a Windows version to follow. The desktop app will initially roll out to paid users.

Moreover, OpenAI plans to give free users access to custom GPTs and its GPT Store, with these features being phased in over the coming weeks. The rollout of GPT-4o's text and image capabilities has begun for paid ChatGPT Plus and Team users, with access for Enterprise users to follow. Free users will also gain access gradually, subject to rate limits.

Upcoming Features

The voice version of GPT-4o is set to launch soon, enhancing its utility beyond text interactions. Developers can look forward to using GPT-4o's text and vision modes, with audio and video capabilities expected to be available to a select group of trusted partners shortly.
