OmniHuman-1: ByteDance’s Leap into AI-Powered Hyper-Realistic Video Generation

ByteDance, the parent company of TikTok, has unveiled a new artificial intelligence model named OmniHuman-1, which is designed to generate realistic videos from photographs and audio clips. The release follows OpenAI's move in December 2024 to broaden access to its video-generation tool, Sora, to ChatGPT Plus and Pro users. Google DeepMind also revealed its Veo model last year, which can generate high-definition videos from text or image inputs. However, neither OpenAI's nor Google's models that transform photos into videos are available for public use.

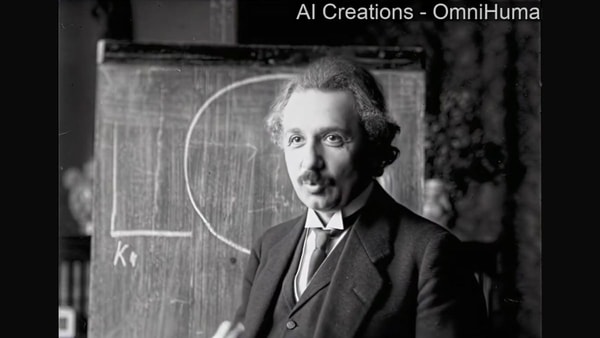

A technical paper, reviewed by the South China Morning Post, indicates that OmniHuman-1 is particularly adept at producing videos of individuals who are speaking, singing, and moving. The research team asserts that the model's performance exceeds that of current AI tools that generate human videos based on audio inputs. Although ByteDance has yet to make the model publicly accessible, sample videos have emerged online, including a notable 23-second clip of Albert Einstein seemingly delivering a speech, which has gained traction on YouTube.

Insights from ByteDance Researchers

Researchers at ByteDance, including Lin Gaojie, Jiang Jianwen, Yang Jiaqi, Zheng Zerong, and Liang Chao, describe their methodology in a recent technical paper. They propose a training technique that combines multiple datasets, integrating text, audio, and movement to improve video-generation models. The approach is intended to address the scalability issues that have held back similar AI technologies.

According to the paper, this technique improves video generation, although the paper does not draw direct comparisons with competing models. By blending diverse data types, the model can produce videos with different aspect ratios and body proportions, ranging from close-ups to full-body shots. It generates detailed facial expressions synchronized with the audio, along with natural head movements and gestures. These capabilities may open the way to wider applications across multiple industries.

One of the released sample videos shows a person delivering a speech in the style of a TED Talk, with hand gestures and lip movements synchronized to the audio. Viewers have remarked that the video closely resembles an actual live recording.
